How to configure Route53 Public Hosted Zone + ACM Certificate + External DNS in EKS for a custom domain

In the previous post, we deployed a sample application to a managed EKS environment in AWS.

In this article, we will:

- Create a Route53 public hosted zone for a custom subdomain (app.learnwithpras.xyz in this scenario).

- Generate a public ACM certificate for the custom subdomain.

- Deploy External DNS to the EKS environment.

- Ensure the application is reachable over HTTPS.

Without further ado, let's dive right into it!

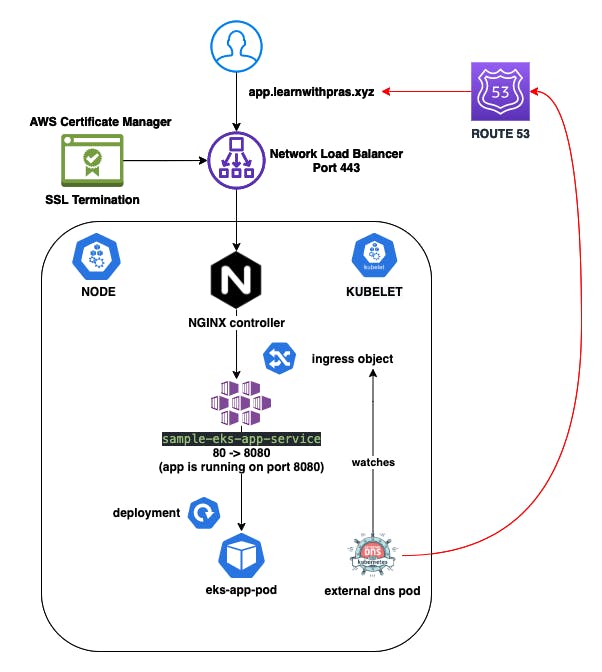

Diagram

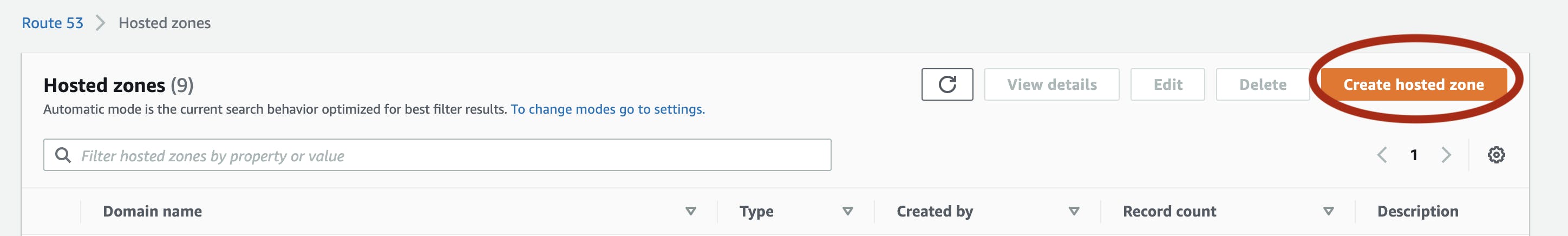

Route53 Public Hosted Zone

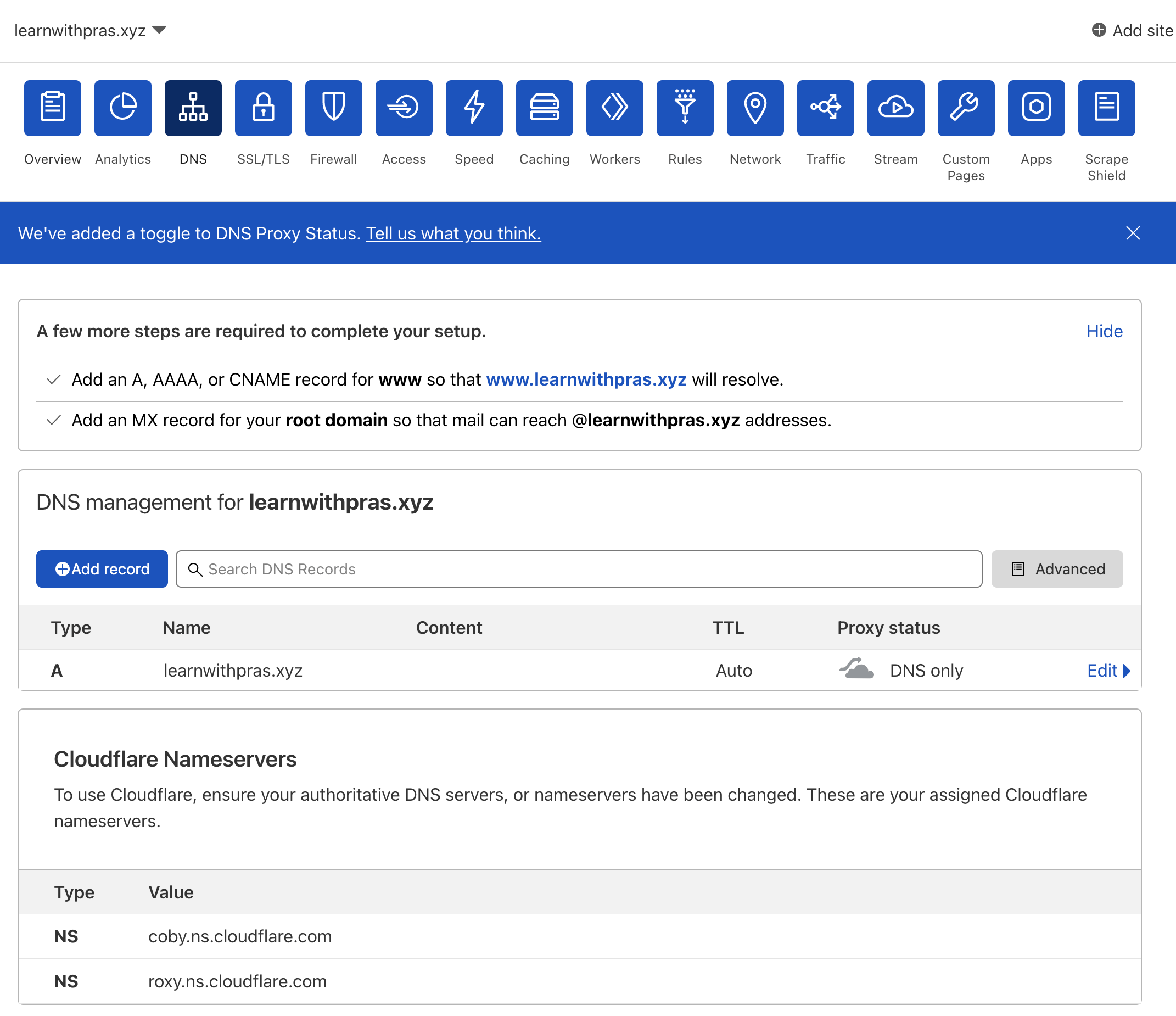

I own the domain learnwithpras.xyz and manage it via Cloudflare. Cloudflare handles all of my services, including any other subdomains I might have. For the purpose of learning and this demo, I only want DNS for the subdomain app.learnwithpras.xyz to be delegated to Amazon Route53.

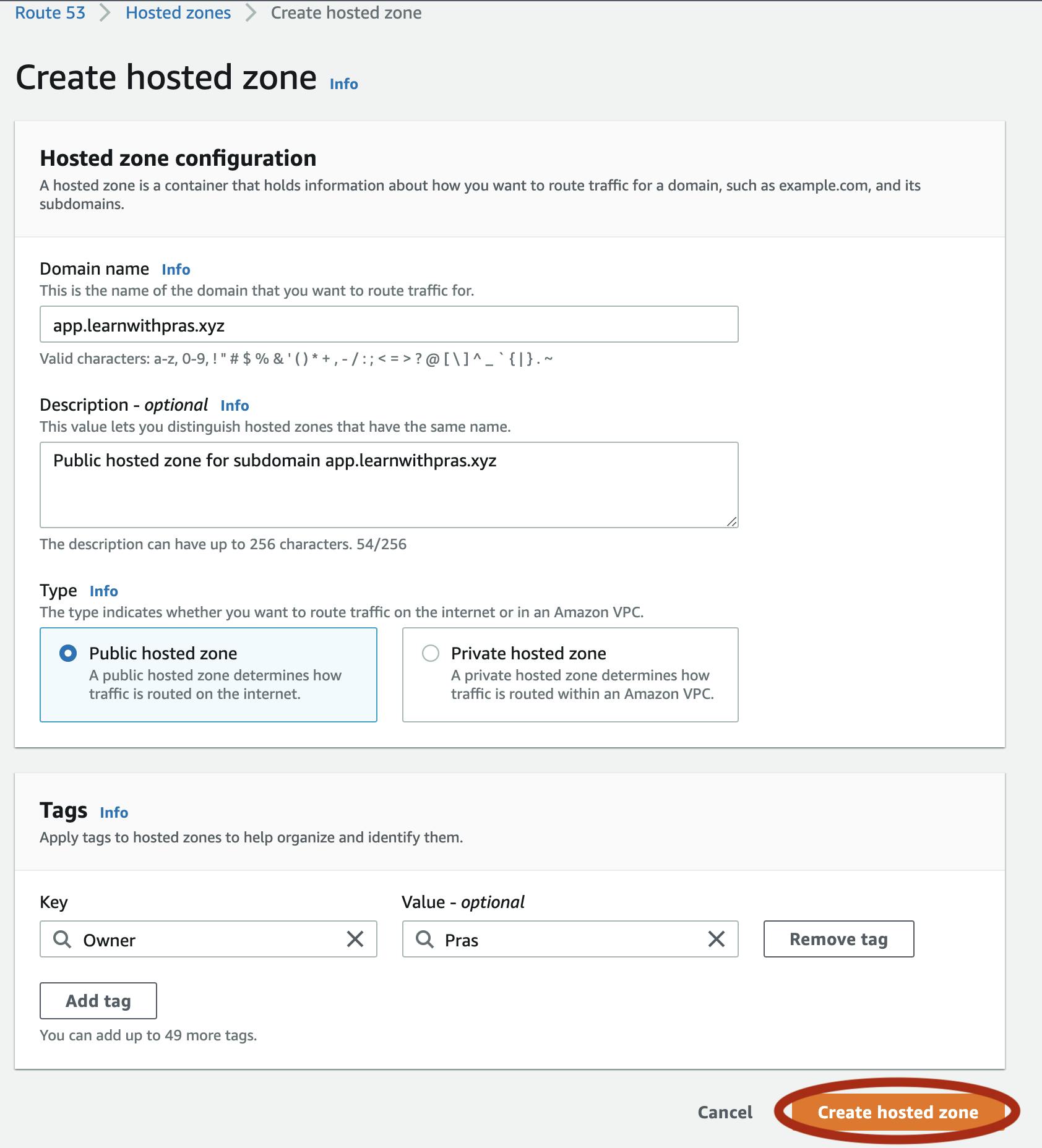

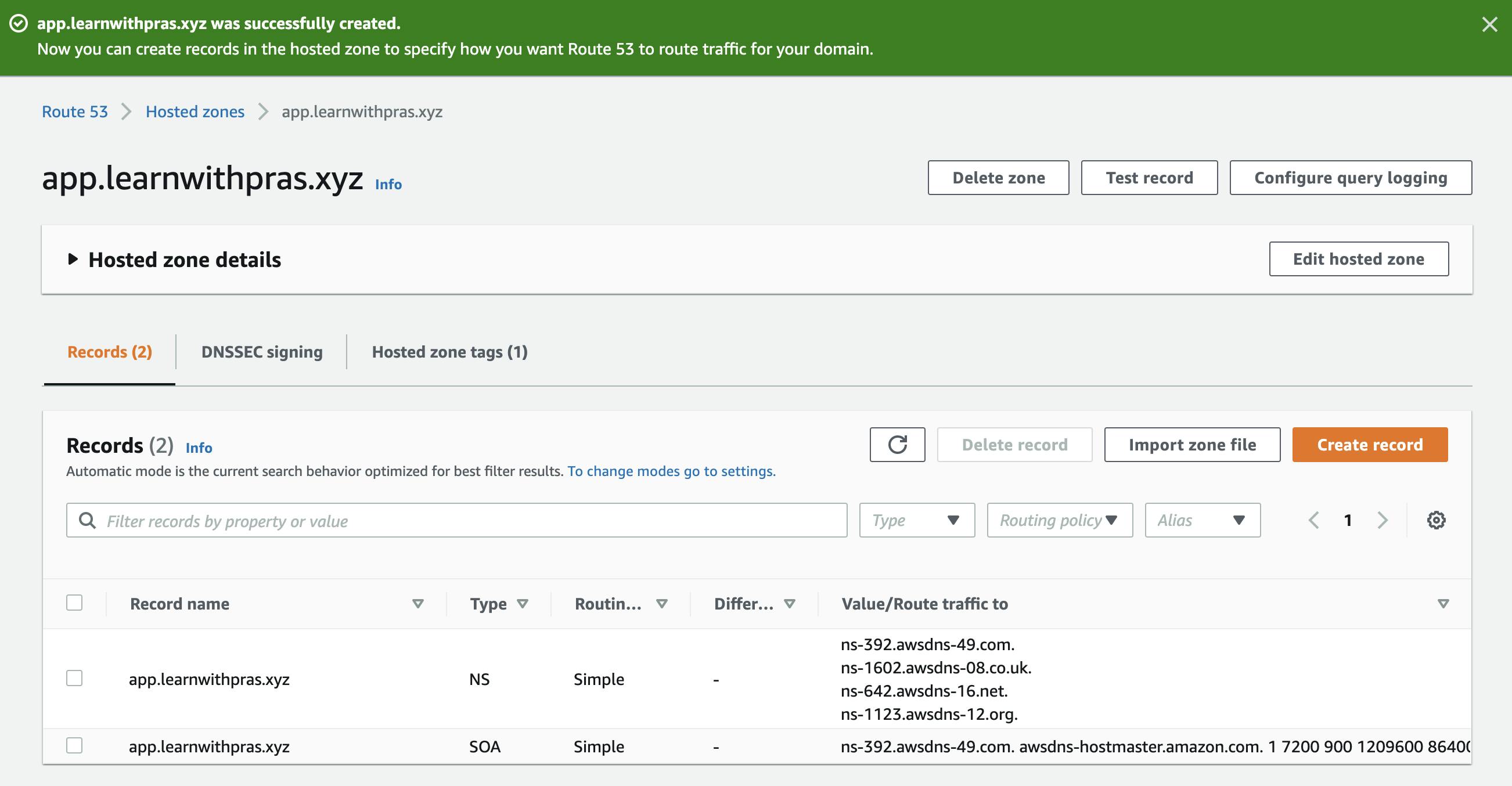

Navigate to Route53 in your AWS account and click Create hosted zone to create a public hosted zone for the subdomain app.learnwithpras.xyz.

For Domain name, enter app.learnwithpras.xyz. Optionally, add a description and tags. Then, click Create hosted zone.

This works, but we want to follow best practices as much as possible, so I am going to delete the manually created hosted zone and use CloudFormation to deploy the public hosted zone instead. We will also store the hosted zone ID in Systems Manager Parameter Store so that other stacks and resources can reference it. The template looks like this:

```yaml
---
AWSTemplateFormatVersion: 2010-09-09
Description: Create a public hosted zone for subdomain app.learnwithpras.xyz
Parameters:
  DomainName:
    Type: String
    Description: Domain name to create public hosted zone for
Resources:
  PrasDomainPublicHostedZone:
    Type: AWS::Route53::HostedZone
    Properties:
      HostedZoneConfig:
        Comment: !Sub 'Public hosted zone for subdomain ${DomainName}'
      HostedZoneTags:
        - Key: Owner
          Value: Pras
        - Key: Purpose
          Value: Learning
      Name: !Ref DomainName
  PrasDomainPublicHostedZoneIDSsmParameter:
    Type: AWS::SSM::Parameter
    Properties:
      Description: Parameter to store hosted zone ID of custom pras domain
      Name: /pras/route53/app/public-hosted-zone/id
      Type: String
      Value: !GetAtt PrasDomainPublicHostedZone.Id
```

```shell
$ make deploy-hosted-zone
aws cloudformation deploy \
  --s3-bucket pras-cloudformation-artifacts-bucket \
  --template-file cloudformation/public-hosted-zone.yaml \
  --stack-name pras-public-hosted-zone \
  --capabilities CAPABILITY_NAMED_IAM \
  --no-fail-on-empty-changeset \
  --parameter-overrides \
    DomainName=app.learnwithpras.xyz \
  --tags \
    Name='Route53 - Public Hosted Zone'
```

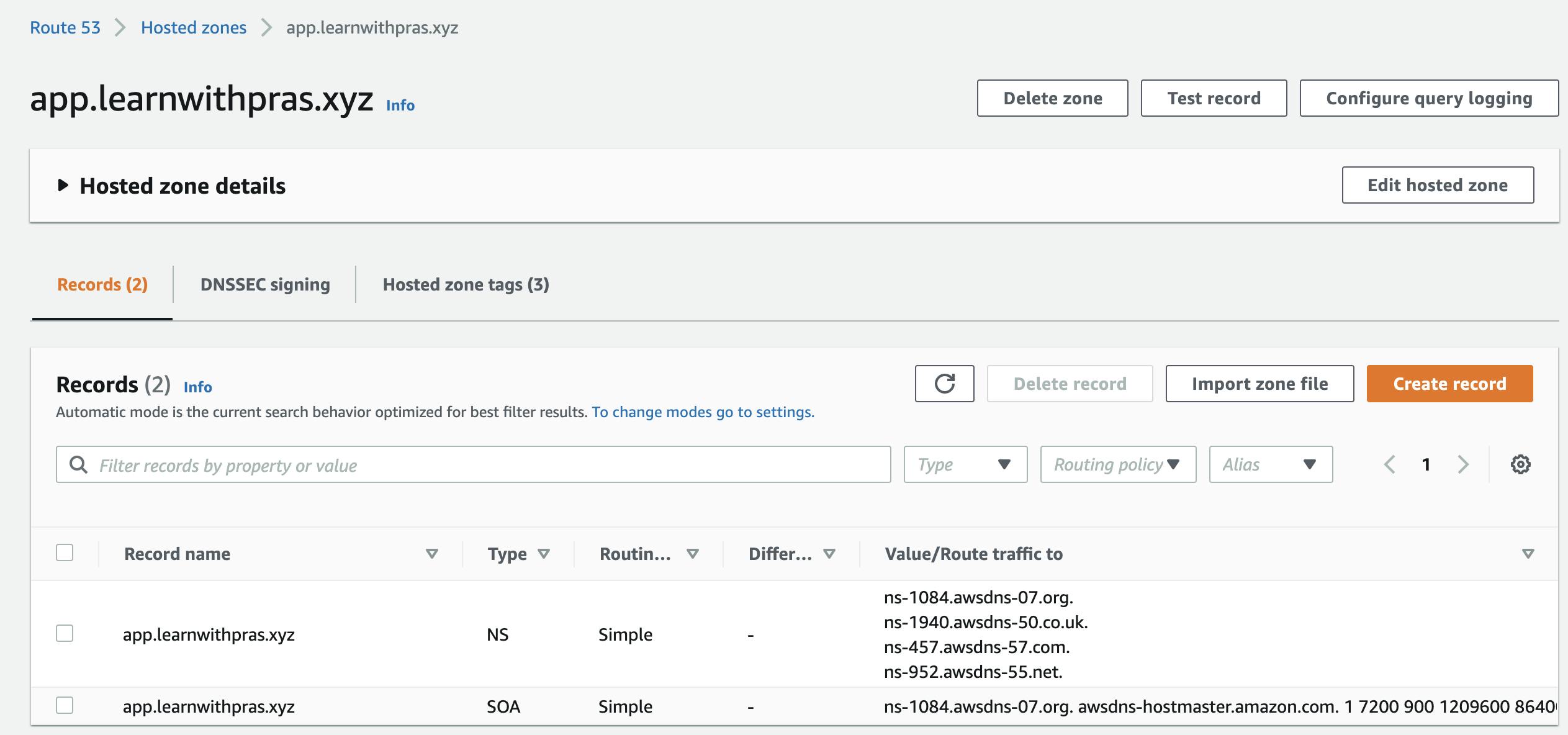

And we end up with the same result 🎉

Now that the hosted zone is set up on the AWS side, we still need to delegate the subdomain: Cloudflare must return NS records for app.learnwithpras.xyz that point to the name servers Route53 assigned to our hosted zone.

I prepared a small Python script that uses the Cloudflare Python library to add these NS records to the Cloudflare zone learnwithpras.xyz:

"""

Cloudflare API client.

"""

import CloudFlare

from dataclasses import dataclass

@dataclass

class PrasCloudflare:

"""

Cloudflare API client.

"""

api_key: str

base_url: str = "https://api.cloudflare.com/client/v4/"

def __post_init__(self):

self.cf = CloudFlare.CloudFlare(token=self.api_key)

def get_zones(self):

"""

Get Cloudflare zones.

"""

# add params = {'per_page':100} if you want to get more than 50 zones

return self.cf.zones.get()

def get_zone_id(self, zone_name):

"""

Get zone id.

"""

return self.cf.zones.get(params={"name": zone_name})[0]["id"]

def get_dns_records(self, zone_id):

"""

Get dns records for a cloudflare zone

"""

return self.cf.zones.dns_records.get(zone_id)

def add_dns_record(self, zone_id, dns_record):

"""

Add dns record for a cloudflare zone

"""

return self.cf.zones.dns_records.post(zone_id, data=dns_record)

if __name__ == "__main__":

zone_name = "learnwithpras.xyz"

dns_record_name = "app.learnwithpras.xyz"

init_cloudflare = PrasCloudflare(<api_token_does_here>)

zone_id = init_cloudflare.get_zone_id(zone_name)

# Add NS records to cloudflare

dns_records = [

{"name": f"{dns_record_name}", "type": "NS", "content": "ns-1084.awsdns-07.org."},

{"name": f"{dns_record_name}", "type": "NS", "content": "ns-1940.awsdns-50.co.uk."},

{"name": f"{dns_record_name}", "type": "NS", "content": "ns-457.awsdns-57.com."},

{"name": f"{dns_record_name}", "type": "NS", "content": "ns-952.awsdns-55.net."},

]

for dns_record in dns_records:

print(init_cloudflare.add_dns_record(zone_id, dns_record))
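The script above hard-codes the four name servers that Route53 assigned to the zone. As an alternative sketch, the payloads could be derived from the zone's delegation set, for example the JSON printed by aws route53 get-hosted-zone --id <zone-id>, whose DelegationSet.NameServers field lists the name servers. build_ns_records is a hypothetical helper, and the sample JSON is trimmed to the only field the function reads:

```python
import json


def build_ns_records(record_name, get_hosted_zone_json):
    """Build Cloudflare NS record payloads from `aws route53 get-hosted-zone` JSON.

    Hypothetical helper: record_name is the delegated subdomain and
    get_hosted_zone_json is the raw JSON text printed by the AWS CLI.
    """
    zone = json.loads(get_hosted_zone_json)
    name_servers = zone["DelegationSet"]["NameServers"]
    # One NS record per Route53 name server; a trailing dot, if present, is dropped.
    return [
        {"name": record_name, "type": "NS", "content": ns.rstrip(".")}
        for ns in name_servers
    ]


if __name__ == "__main__":
    sample = json.dumps({
        "DelegationSet": {
            "NameServers": [
                "ns-1084.awsdns-07.org",
                "ns-1940.awsdns-50.co.uk",
                "ns-457.awsdns-57.com",
                "ns-952.awsdns-55.net",
            ]
        }
    })
    for record in build_ns_records("app.learnwithpras.xyz", sample):
        print(record)
```

Each returned dict has the same shape as the entries in dns_records, so it could be passed directly to add_dns_record.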

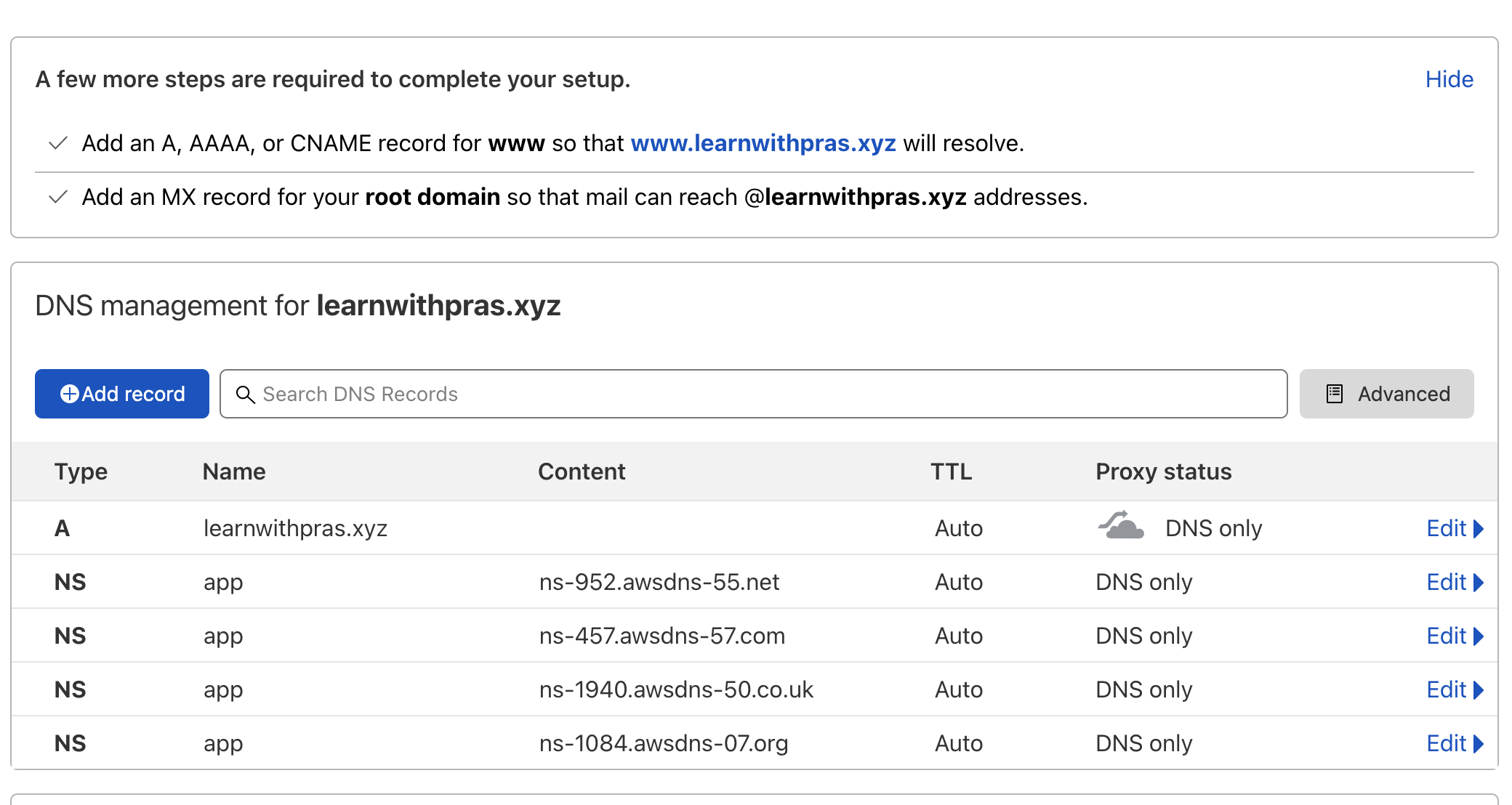

Running the script shows that the NS records were successfully added to the Cloudflare zone:

```
$ python3 src/cloudflare.py
{'id': '1ce28e7ef9e9c7e5e7976e917dc9246b', 'zone_id': '220cd9da0f4d9d90761523bfeac82643', 'zone_name': 'learnwithpras.xyz', 'name': 'app.learnwithpras.xyz', 'type': 'NS', 'content': 'ns-1084.awsdns-07.org', 'proxiable': False, 'proxied': False, 'ttl': 1, 'locked': False, 'meta': {'auto_added': False, 'managed_by_apps': False, 'managed_by_argo_tunnel': False, 'source': 'primary'}, 'created_on': '2021-09-09T21:08:59.312151Z', 'modified_on': '2021-09-09T21:08:59.312151Z'}
{'id': 'b086797c60b78cbd0149ee3b9efb877f', 'zone_id': '220cd9da0f4d9d90761523bfeac82643', 'zone_name': 'learnwithpras.xyz', 'name': 'app.learnwithpras.xyz', 'type': 'NS', 'content': 'ns-1940.awsdns-50.co.uk', 'proxiable': False, 'proxied': False, 'ttl': 1, 'locked': False, 'meta': {'auto_added': False, 'managed_by_apps': False, 'managed_by_argo_tunnel': False, 'source': 'primary'}, 'created_on': '2021-09-09T21:08:59.61448Z', 'modified_on': '2021-09-09T21:08:59.61448Z'}
{'id': 'ec4d60dbe20a5f355b57891d33ba675b', 'zone_id': '220cd9da0f4d9d90761523bfeac82643', 'zone_name': 'learnwithpras.xyz', 'name': 'app.learnwithpras.xyz', 'type': 'NS', 'content': 'ns-457.awsdns-57.com', 'proxiable': False, 'proxied': False, 'ttl': 1, 'locked': False, 'meta': {'auto_added': False, 'managed_by_apps': False, 'managed_by_argo_tunnel': False, 'source': 'primary'}, 'created_on': '2021-09-09T21:08:59.887883Z', 'modified_on': '2021-09-09T21:08:59.887883Z'}
{'id': 'df38fdfb41879e75b5203b9e7e7a8350', 'zone_id': '220cd9da0f4d9d90761523bfeac82643', 'zone_name': 'learnwithpras.xyz', 'name': 'app.learnwithpras.xyz', 'type': 'NS', 'content': 'ns-952.awsdns-55.net', 'proxiable': False, 'proxied': False, 'ttl': 1, 'locked': False, 'meta': {'auto_added': False, 'managed_by_apps': False, 'managed_by_argo_tunnel': False, 'source': 'primary'}, 'created_on': '2021-09-09T21:09:00.15107Z', 'modified_on': '2021-09-09T21:09:00.15107Z'}
```


Running dig to fetch the NS records for app.learnwithpras.xyz confirms that the delegation is in place:

```
$ dig app.learnwithpras.xyz ns

; <<>> DiG 9.10.6 <<>> app.learnwithpras.xyz ns
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 54889
;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 1232
;; QUESTION SECTION:
;app.learnwithpras.xyz. IN NS

;; ANSWER SECTION:
app.learnwithpras.xyz. 172800 IN NS ns-1084.awsdns-07.org.
app.learnwithpras.xyz. 172800 IN NS ns-1940.awsdns-50.co.uk.
app.learnwithpras.xyz. 172800 IN NS ns-457.awsdns-57.com.
app.learnwithpras.xyz. 172800 IN NS ns-952.awsdns-55.net.

;; Query time: 139 msec
;; SERVER: 192.168.178.1#53(192.168.178.1)
;; WHEN: Fri Sep 10 07:17:52 AEST 2021
;; MSG SIZE rcvd: 190
```
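To check the delegation from a script rather than by eye, the ANSWER SECTION can be compared with the name servers Route53 assigned to the zone. This is a simplified sketch that assumes dig's default output format (one "<name> <ttl> <class> NS <target>" line per answer, followed by a blank line); delegated_name_servers is a hypothetical helper, not part of any library:

```python
def delegated_name_servers(dig_output, zone):
    """Return the NS targets for `zone` listed in dig's ANSWER SECTION.

    Simplified parser: assumes dig's default output, where each answer line is
    "<name> <ttl> <class> NS <target>" and a blank line ends the section.
    """
    fqdn = zone.rstrip(".").lower() + "."
    found = set()
    in_answer = False
    for raw_line in dig_output.splitlines():
        line = raw_line.strip()
        if line.startswith(";; ANSWER SECTION"):
            in_answer = True
            continue
        if not in_answer:
            continue
        if not line or line.startswith(";"):
            break  # blank line or next comment block: end of the answer section
        fields = line.split()
        if len(fields) >= 5 and fields[0].lower() == fqdn and fields[3] == "NS":
            # Normalise the target: drop the trailing root dot, compare case-insensitively.
            found.add(fields[4].rstrip(".").lower())
    return found


# Name servers Route53 assigned to the hosted zone in this walkthrough.
EXPECTED = {
    "ns-1084.awsdns-07.org",
    "ns-1940.awsdns-50.co.uk",
    "ns-457.awsdns-57.com",
    "ns-952.awsdns-55.net",
}
```

Feeding it the text of dig app.learnwithpras.xyz ns and comparing the result with EXPECTED (for example, delegated_name_servers(output, "app.learnwithpras.xyz") == EXPECTED) tells you whether Cloudflare is handing out the Route53 name servers.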

Create Public ACM Certificate

Let's create an SSL certificate with AWS Certificate Manager (ACM). We will use this certificate later with the NGINX ingress controller's Network Load Balancer (created as part of the previous article on application deployment) and terminate the TLS session at the NLB.

Cloudformation Template

```yaml
---
AWSTemplateFormatVersion: 2010-09-09
Description: Deploy ACM Certificate to use with EKS application
Parameters:
  DomainName:
    Type: String
    Description: Domain name to issue certificate for
  HostedZoneId:
    Type: AWS::SSM::Parameter::Value<String>
    Description: Hosted Zone in use for the domain specified
Resources:
  PrasAppAcmCertificate:
    Type: AWS::CertificateManager::Certificate
    Properties:
      DomainName: !Ref DomainName
      ValidationMethod: DNS
      DomainValidationOptions:
        - DomainName: !Ref DomainName
          HostedZoneId: !Ref HostedZoneId
  PrasAppAcmCertificateArnSsmParameter:
    Type: AWS::SSM::Parameter
    Properties:
      Description: Parameter to store ARN of the ACM certificate used by the EKS application
      Name: /pras/eks/app/certificate/arn
      Type: String
      Value: !Ref PrasAppAcmCertificate
```

CloudFormation parameters:

NOTE: for a parameter of type AWS::SSM::Parameter::Value<String>, we pass the name (path) of an SSM parameter, and CloudFormation resolves it to the parameter's stored value at deploy time.

- DomainName: app.learnwithpras.xyz (the domain you are using)

- HostedZoneId: /pras/route53/app/public-hosted-zone/id (created with the hosted zone CFN stack earlier)
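Conceptually, CloudFormation treats such a parameter as a lookup key: the override is an SSM parameter name, the stack receives that parameter's stored value, and the deployment fails if the name does not exist. The toy model below only illustrates that behaviour, with a plain dict standing in for Parameter Store; resolve_parameters is hypothetical and is not how CloudFormation is implemented:

```python
SSM_STRING_TYPE = "AWS::SSM::Parameter::Value<String>"


def resolve_parameters(declared_types, overrides, ssm_store):
    """Toy model of SSM-typed CloudFormation parameters (not CloudFormation's code).

    declared_types maps parameter names to their template Type, overrides holds
    the values passed via --parameter-overrides, and ssm_store is a dict standing
    in for Systems Manager Parameter Store.
    """
    resolved = {}
    for name, value in overrides.items():
        if declared_types.get(name) == SSM_STRING_TYPE:
            # The override is a parameter *name*; the stack sees its stored value.
            if value not in ssm_store:
                raise KeyError(f"SSM parameter {value} not found (needed by {name})")
            resolved[name] = ssm_store[value]
        else:
            # Plain String parameters are passed through unchanged.
            resolved[name] = value
    return resolved
```

A typo in the parameter path therefore surfaces as a failed deployment rather than as a wrong hosted zone ID inside the stack.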

```shell
aws cloudformation deploy \
  --s3-bucket pras-cloudformation-artifacts-bucket \
  --template-file cloudformation/acm-cert.yaml \
  --stack-name pras-acm-cert \
  --capabilities CAPABILITY_NAMED_IAM \
  --no-fail-on-empty-changeset \
  --parameter-overrides \
    DomainName=app.learnwithpras.xyz \
    HostedZoneId=/pras/route53/app/public-hosted-zone/id \
  --tags \
    Name='Kubernetes Cluster Resources - ACM Cert'
```

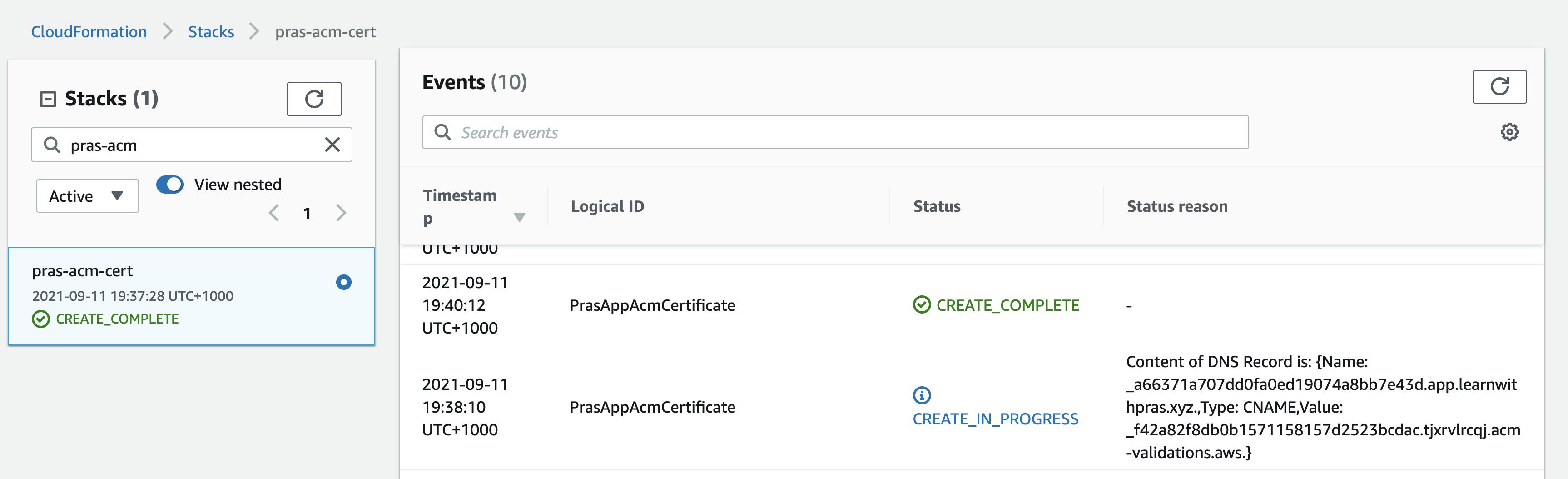

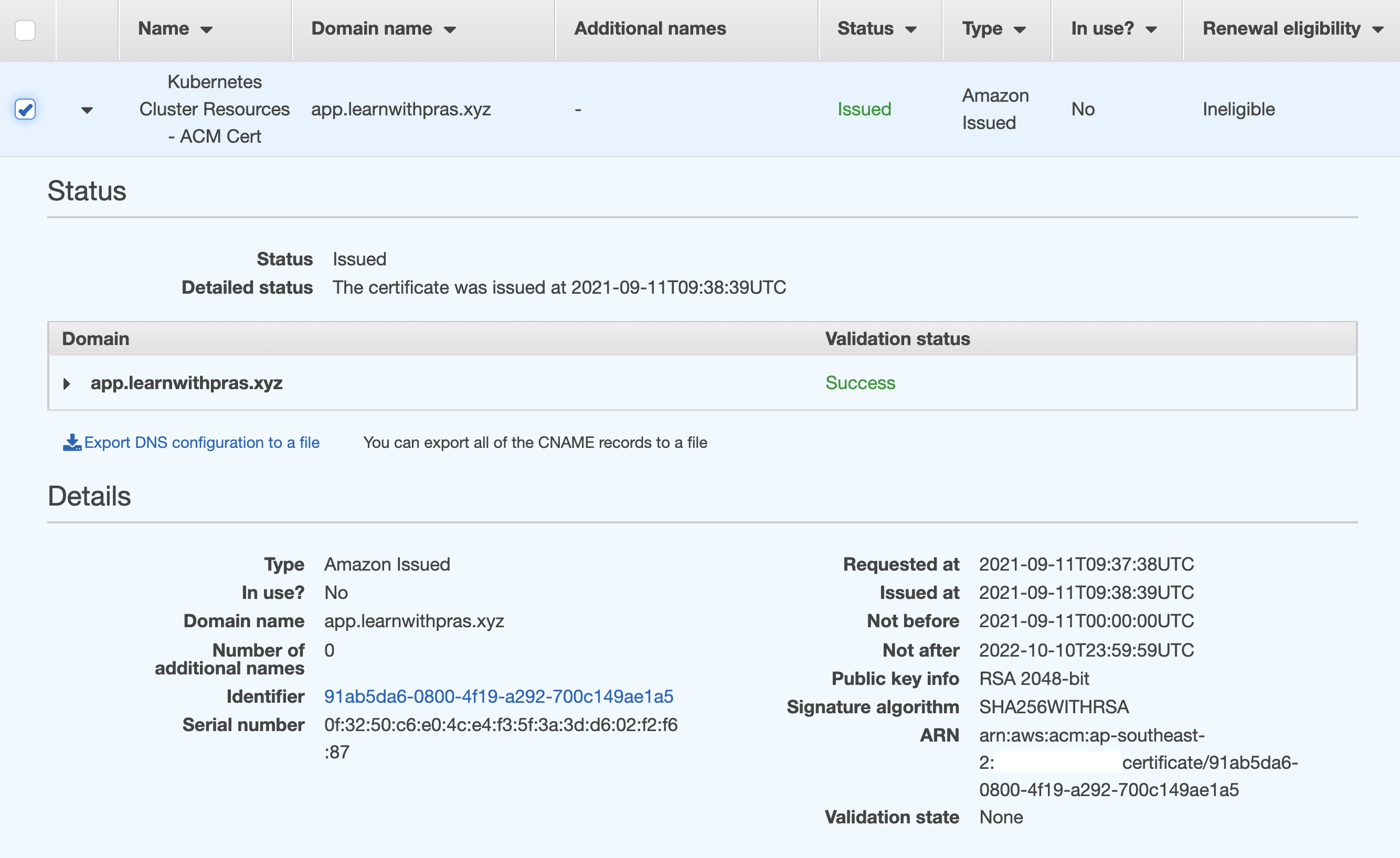

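The CloudFormation stack has been deployed successfully.

Because we used the DNS validation method, the CNAME record that ACM needs for validation has been added to the Route53 hosted zone (CloudFormation creates it automatically because we supplied HostedZoneId in DomainValidationOptions).

Navigate to the ACM console to view the certificate and its details.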

Configure External DNS

External DNS makes Kubernetes resources discoverable via public DNS servers. It maps domain names to Kubernetes resources dynamically by periodically polling objects such as Services and Ingresses for changes.

We need to create an IAM role for the service account used by the External DNS pods. The role can list hosted zones and record sets, and can update records in the Route53 public hosted zone created earlier for the subdomain app.learnwithpras.xyz.

```yaml
---
AWSTemplateFormatVersion: 2010-09-09
Description: Deploy Managed Kubernetes Resources - External DNS Consumable Role
Parameters:
  HostedZoneId:
    Type: AWS::SSM::Parameter::Value<String>
    Description: Public hosted zone ID in use
  OidcProvider:
    Type: AWS::SSM::Parameter::Value<String>
    Description: EKS Cluster's OIDC provider url
  ExternalDnsNamespace:
    Type: String
    Description: Kubernetes External DNS Namespace
  ExternalDnsServiceAccountName:
    Type: String
    Description: Name of the Service Account used by external dns to interact with Route53
Resources:
  PrasClusterExternalDnsRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument: !Sub
        - |
          {
            "Version": "2012-10-17",
            "Statement": [
              {
                "Effect": "Allow",
                "Principal": {
                  "Federated": "${IamOidcProviderArn}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                  "StringEquals": {
                    "${OidcProvider}:sub": "system:serviceaccount:${ExternalDnsNamespace}:${ExternalDnsServiceAccountName}"
                  }
                }
              }
            ]
          }
        - IamOidcProviderArn: !Sub arn:aws:iam::${AWS::AccountId}:oidc-provider/${OidcProvider}
      Policies:
        - PolicyName: !Sub ${AWS::StackName}-pras-eks-cluster-externaldns-policy
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - route53:ChangeResourceRecordSets
                Resource: !Sub arn:aws:route53:::hostedzone/${HostedZoneId}
              - Effect: Allow
                Action:
                  - route53:ListHostedZones
                  - route53:ListResourceRecordSets
                Resource: "*"
  PrasClusterExternalDnsRoleArn:
    Type: AWS::SSM::Parameter
    Properties:
      Description: Parameter to store ARN of IAM Role used by External DNS pod to access Route53 resources
      Name: /pras/eks/application/dns/iam-role/arn
      Type: String
      Value: !GetAtt PrasClusterExternalDnsRole.Arn
```
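The ChangeResourceRecordSets statement is scoped to arn:aws:route53:::hostedzone/${HostedZoneId}, which assumes the SSM parameter holds the bare zone ID. Some Route53 API responses, and the external-dns logs later in this post, print the ID with a /hostedzone/ prefix instead, so if you ever assemble this ARN outside CloudFormation, normalising the ID first avoids a malformed resource. hosted_zone_arn is a hypothetical sketch:

```python
def hosted_zone_arn(zone_id):
    """Build the Route53 hosted-zone ARN used as the IAM policy Resource.

    Accepts either a bare ID ("Z0743...") or the "/hostedzone/Z0743..." form.
    """
    bare_id = zone_id.strip()
    prefix = "/hostedzone/"
    if bare_id.startswith(prefix):
        bare_id = bare_id[len(prefix):]
    if not bare_id or "/" in bare_id:
        raise ValueError(f"unexpected hosted zone id: {zone_id!r}")
    # Route53 is a global service, so the region and account fields of the ARN are empty.
    return f"arn:aws:route53:::hostedzone/{bare_id}"
```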

CloudFormation parameters:

NOTE: for a parameter of type AWS::SSM::Parameter::Value<String>, we pass the name (path) of an SSM parameter, and CloudFormation resolves it to the parameter's stored value at deploy time.

- HostedZoneId: /pras/route53/app/public-hosted-zone/id (created with the hosted zone CFN stack earlier)

- OidcProvider: /pras/eks/cluster/oidc-provider/url (created in the cluster creation CFN template)

- ExternalDnsNamespace: external-dns (remember this, as we will need to create the namespace with this name)

- ExternalDnsServiceAccountName: external-dns-svc (remember this, as we will need to create the service account with this name)

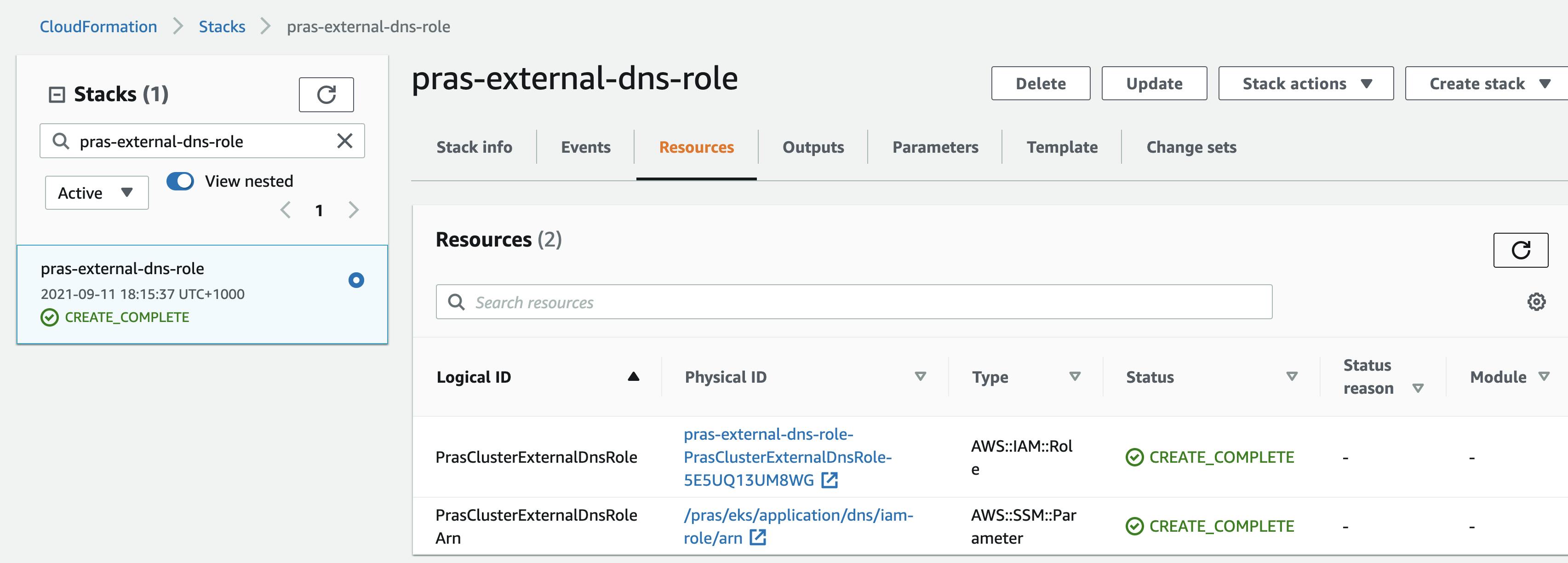

Run the AWS CLI command below to deploy the IAM role together with an SSM parameter that stores the role's ARN (we will fetch this ARN later and supply it in the external-dns service account's annotation):

```shell
aws cloudformation deploy \
  --s3-bucket pras-cloudformation-artifacts-bucket \
  --template-file cloudformation/external-dns-role.yaml \
  --stack-name pras-external-dns-role \
  --capabilities CAPABILITY_NAMED_IAM \
  --no-fail-on-empty-changeset \
  --parameter-overrides \
    OidcProvider=/pras/eks/cluster/oidc-provider/url \
    ExternalDnsNamespace=external-dns \
    ExternalDnsServiceAccountName=external-dns-svc \
    HostedZoneId=/pras/route53/app/public-hosted-zone/id \
  --tags \
    Name='Kubernetes Cluster Resources - External DNS Role'
```

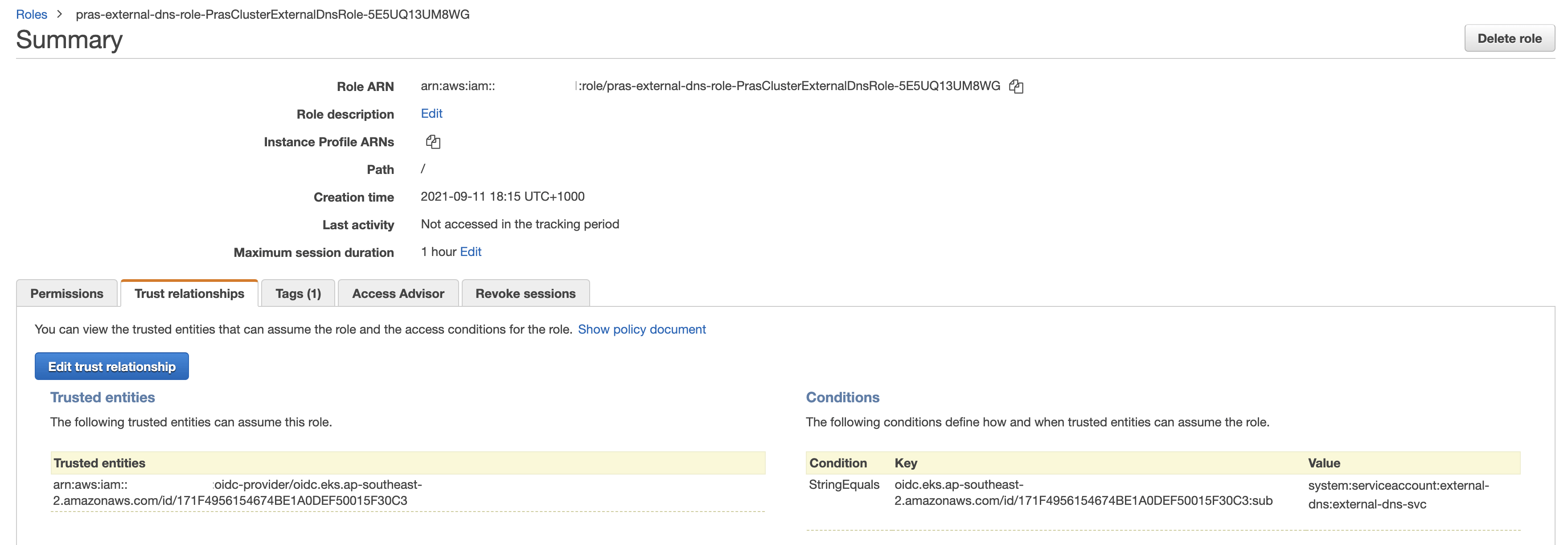

As seen in the IAM console, the role only trusts the OIDC provider created for the EKS cluster. Even better, the condition ensures that the subject assuming the role must be the service account external-dns-svc in the namespace external-dns, so only pods running under that service account (our external-dns containers) can obtain its credentials. This way, we do not have to add permissions to the node group's EC2 instance IAM role or pass credentials into the containers for the application to interact with AWS services.
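To make the condition concrete: a pod's projected service account token carries a sub claim of the form system:serviceaccount:<namespace>:<name>, and STS only issues credentials when it satisfies the trust policy. The sketch below rebuilds the same trust policy in Python and checks a few subjects against it. The account ID and OIDC issuer are placeholders, and subject_allowed is a simplified stand-in for IAM's StringEquals evaluation, not the real policy engine:

```python
import json


def irsa_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Build a trust policy equivalent to the one in the CloudFormation template.

    oidc_provider is the cluster's OIDC issuer; an https:// scheme, if present,
    is stripped because IAM uses the bare host/path in both places below.
    """
    provider = oidc_provider
    if provider.startswith("https://"):
        provider = provider[len("https://"):]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        f"{provider}:sub": f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


def subject_allowed(policy, token_sub):
    """Simplified StringEquals check of a token's sub claim (not IAM's policy engine)."""
    for statement in policy["Statement"]:
        conditions = statement.get("Condition", {}).get("StringEquals", {})
        for key, expected in conditions.items():
            if key.endswith(":sub") and expected == token_sub:
                return True
    return False


if __name__ == "__main__":
    # Placeholder account ID and issuer, for illustration only.
    policy = irsa_trust_policy(
        "123456789012",
        "https://oidc.eks.ap-southeast-2.amazonaws.com/id/EXAMPLE",
        "external-dns",
        "external-dns-svc",
    )
    print(json.dumps(policy, indent=2))
```

A pod in another namespace, or one using a different service account in external-dns, presents a different sub claim and is refused.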

A quick kubectl get all -A shows that the cluster and the sample EKS application are up and running:

```
$ kubectl get all -A
NAMESPACE NAME READY STATUS RESTARTS AGE
boltdynamics pod/sample-eks-app-deployment-6c9fff7798-ckw92 1/1 Running 0 12h
ingress-nginx pod/ingress-nginx-controller-65c4f84996-9q6rg 1/1 Running 0 12h
kube-system pod/aws-node-6bph2 1/1 Running 0 12h
kube-system pod/coredns-6bfbc5f9f8-78sns 1/1 Running 0 12h
kube-system pod/coredns-6bfbc5f9f8-t4k95 1/1 Running 0 12h
kube-system pod/kube-proxy-5tflf 1/1 Running 0 12h

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
boltdynamics service/sample-eks-app-service ClusterIP 172.20.161.123 <none> 80/TCP 2d16h
default service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 2d16h
ingress-nginx service/ingress-nginx-controller LoadBalancer 172.20.178.68 ab6361525ae524562852af72eb047dc4-d9886e3de31f1805.elb.ap-southeast-2.amazonaws.com 80:31910/TCP,443:31719/TCP 2d16h
ingress-nginx service/ingress-nginx-controller-admission ClusterIP 172.20.142.246 <none> 443/TCP 2d16h
kube-system service/kube-dns ClusterIP 172.20.0.10 <none> 53/UDP,53/TCP 2d16h

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
kube-system daemonset.apps/aws-node 1 1 1 1 1 <none> 2d16h
kube-system daemonset.apps/kube-proxy 1 1 1 1 1 <none> 2d16h

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE
boltdynamics deployment.apps/sample-eks-app-deployment 1/1 1 1 2d16h
ingress-nginx deployment.apps/ingress-nginx-controller 1/1 1 1 2d16h
kube-system deployment.apps/coredns 2/2 2 2 2d16h

NAMESPACE NAME DESIRED CURRENT READY AGE
boltdynamics replicaset.apps/sample-eks-app-deployment-6c9fff7798 1 1 1 2d15h
boltdynamics replicaset.apps/sample-eks-app-deployment-7f6d79fb5c 0 0 0 2d16h
ingress-nginx replicaset.apps/ingress-nginx-controller-65c4f84996 1 1 1 2d16h
kube-system replicaset.apps/coredns-6bfbc5f9f8 2 2 2 2d16h

NAMESPACE NAME COMPLETIONS DURATION AGE
ingress-nginx job.batch/ingress-nginx-admission-create 1/1 6s 2d16h
ingress-nginx job.batch/ingress-nginx-admission-patch 1/1 7s 2d16h
```

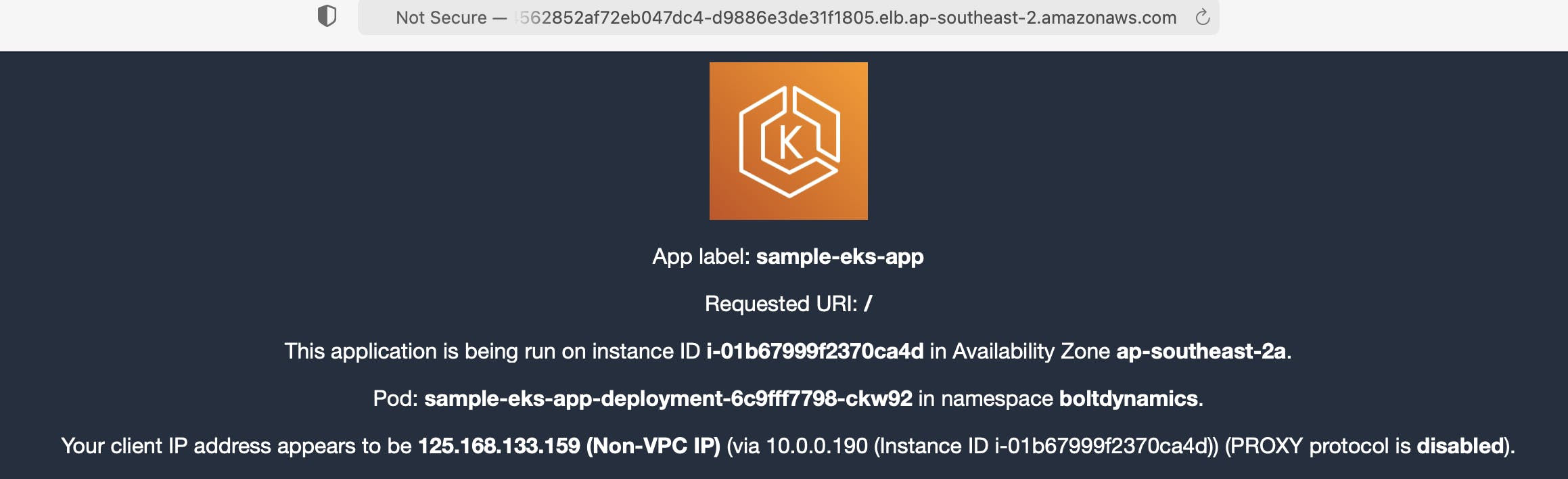

If we browse to http://ab6361525ae524562852af72eb047dc4-d9886e3de31f1805.elb.ap-southeast-2.amazonaws.com/, the external address of service/ingress-nginx-controller, we can see that the sample application is also running.

Next, let's get the YAML required to deploy External DNS and modify a few values:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: external-dns
  labels:
    name: external-dns
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns-svc
  namespace: external-dns
  annotations:
    eks.amazonaws.com/role-arn: ${EXTERNAL_DNS_ROLE_ARN}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: external-dns-role
rules:
  - apiGroups: [""]
    resources: ["services","endpoints","pods"]
    verbs: ["get","watch","list"]
  - apiGroups: ["extensions","networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get","watch","list"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns-role
subjects:
  - kind: ServiceAccount
    name: external-dns-svc
    namespace: external-dns
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns-deployment
  namespace: external-dns
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: external-dns
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns-svc
      containers:
        - name: external-dns
          image: k8s.gcr.io/external-dns/external-dns:v0.9.0
          resources:
            limits:
              cpu: 500m
              memory: 512Mi
            requests:
              cpu: 300m
              memory: 256Mi
          args:
            - --source=ingress
            - --domain-filter=app.learnwithpras.xyz
            - --provider=aws
            - --policy=sync
            - --aws-zone-type=public
            - --registry=txt
            - --publish-internal-services
```

Notice how the namespace and service account names match the ones specified in the IAM role created earlier. We will use envsubst to pass in the IAM role ARN, replacing ${EXTERNAL_DNS_ROLE_ARN} in the YAML template.

Notice also the container arguments: --source=ingress tells external-dns to look for DNS hostnames in Ingress objects, --domain-filter is set to our custom domain app.learnwithpras.xyz, and --policy=sync tells external-dns to create, update and delete records in Route53 so that they match the current state of the cluster (you could use upsert-only instead, which prevents external-dns from deleting any records).
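The difference between the two policies can be illustrated with a toy planner. This is a simplified model, not external-dns's code: it assumes records are plain name to target mappings and that current only contains records this external-dns instance owns (ownership is tracked through the TXT registry). Only the two policies discussed here are modelled; plan_changes is hypothetical:

```python
def plan_changes(desired, current, policy="sync"):
    """Toy model of external-dns's --policy flag.

    desired: records derived from Kubernetes sources (name -> target).
    current: records already in the zone that this instance owns (name -> target).
    Returns a list of (action, name, target) tuples.
    """
    if policy not in ("sync", "upsert-only"):
        raise ValueError(f"policy not modelled in this sketch: {policy}")
    changes = []
    for name in sorted(desired):
        if name not in current:
            changes.append(("CREATE", name, desired[name]))
        elif current[name] != desired[name]:
            changes.append(("UPDATE", name, desired[name]))
    if policy == "sync":
        # Only sync removes owned records whose source object no longer exists.
        for name in sorted(current):
            if name not in desired:
                changes.append(("DELETE", name, current[name]))
    return changes
```

With upsert-only, a record whose Ingress was deleted simply stays in Route53, which is safer but leaves stale entries behind.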

Run export EXTERNAL_DNS_ROLE_ARN=$(aws ssm get-parameter --name /pras/eks/application/dns/iam-role/arn --query Parameter.Value --output text) to store the IAM role's ARN in a variable. Finally, run envsubst < kubernetes/external-dns/external-dns.yaml | kubectl apply -f - to deploy the External DNS resources to the EKS cluster:

```
$ envsubst < kubernetes/external-dns/external-dns.yaml | kubectl apply -f -
namespace/external-dns created
serviceaccount/external-dns-svc created
clusterrole.rbac.authorization.k8s.io/external-dns-role created
clusterrolebinding.rbac.authorization.k8s.io/external-dns-viewer created
deployment.apps/external-dns-deployment created

$ kubectl get all -n external-dns
NAME READY STATUS RESTARTS AGE
pod/external-dns-deployment-778d4c597-l8nn2 1/1 Running 0 80s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/external-dns-deployment 1/1 1 1 80s

NAME DESIRED CURRENT READY AGE
replicaset.apps/external-dns-deployment-778d4c597 1 1 1 80s
```
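One caveat with envsubst: if EXTERNAL_DNS_ROLE_ARN is not exported, GNU envsubst substitutes an empty string, and the service account is created with an empty role-arn annotation. A hedged alternative is Python's string.Template, whose substitute method raises KeyError for a missing variable. render_manifest is a hypothetical helper; note that Template also interprets other $ sequences, so it only suits manifests without stray dollar signs:

```python
import os
from string import Template


def render_manifest(template_text, env=None):
    """Render ${VAR} placeholders in a manifest, similar to envsubst.

    Unlike envsubst, Template.substitute raises KeyError for an unset variable,
    so a missing EXTERNAL_DNS_ROLE_ARN fails loudly instead of producing an
    empty eks.amazonaws.com/role-arn annotation.
    """
    return Template(template_text).substitute(os.environ if env is None else env)
```

Saved as, say, a small render.py that prints render_manifest(sys.stdin.read()), it could replace envsubst in the pipeline: python3 render.py < kubernetes/external-dns/external-dns.yaml | kubectl apply -f -.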

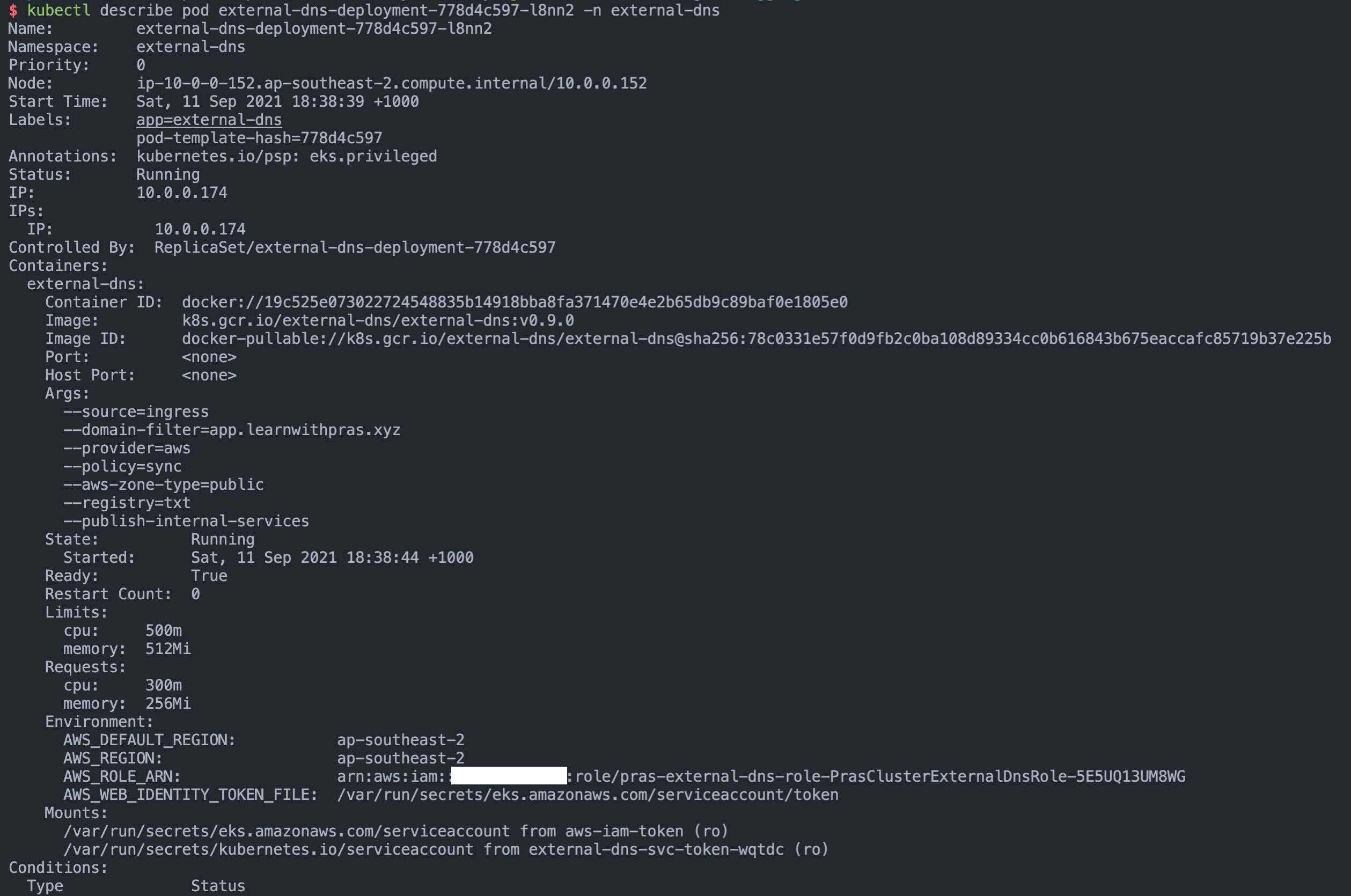

Running kubectl describe pod external-dns-deployment-778d4c597-l8nn2 -n external-dns verifies that the IAM role's ARN has been passed to the external-dns pod.

Running kubectl logs external-dns-deployment-778d4c597-l8nn2 -n external-dns shows that external-dns has synced and is ready to add any new records to the hosted zone:

```
$ kubectl logs external-dns-deployment-778d4c597-l8nn2 -n external-dns
time="2021-09-11T08:38:44Z" level=info msg="config: {APIServerURL: KubeConfig: RequestTimeout:30s DefaultTargets:[] ContourLoadBalancerService:heptio-contour/contour GlooNamespace:gloo-system SkipperRouteGroupVersion:zalando.org/v1 Sources:[ingress] Namespace: AnnotationFilter: LabelFilter: FQDNTemplate: CombineFQDNAndAnnotation:false IgnoreHostnameAnnotation:false IgnoreIngressTLSSpec:false IgnoreIngressRulesSpec:false Compatibility: PublishInternal:true PublishHostIP:false AlwaysPublishNotReadyAddresses:false ConnectorSourceServer:localhost:8080 Provider:aws GoogleProject: GoogleBatchChangeSize:1000 GoogleBatchChangeInterval:1s GoogleZoneVisibility: DomainFilter:[app.learnwithpras.xyz] ExcludeDomains:[] RegexDomainFilter: RegexDomainExclusion: ZoneNameFilter:[] ZoneIDFilter:[] AlibabaCloudConfigFile:/etc/kubernetes/alibaba-cloud.json AlibabaCloudZoneType: AWSZoneType:public AWSZoneTagFilter:[] AWSAssumeRole: AWSBatchChangeSize:1000 AWSBatchChangeInterval:1s AWSEvaluateTargetHealth:true AWSAPIRetries:3 AWSPreferCNAME:false AWSZoneCacheDuration:0s AzureConfigFile:/etc/kubernetes/azure.json AzureResourceGroup: AzureSubscriptionID: AzureUserAssignedIdentityClientID: BluecatConfigFile:/etc/kubernetes/bluecat.json CloudflareProxied:false CloudflareZonesPerPage:50 CoreDNSPrefix:/skydns/ RcodezeroTXTEncrypt:false AkamaiServiceConsumerDomain: AkamaiClientToken: AkamaiClientSecret: AkamaiAccessToken: AkamaiEdgercPath: AkamaiEdgercSection: InfobloxGridHost: InfobloxWapiPort:443 InfobloxWapiUsername:admin InfobloxWapiPassword: InfobloxWapiVersion:2.3.1 InfobloxSSLVerify:true InfobloxView: InfobloxMaxResults:0 InfobloxFQDNRegEx: DynCustomerName: DynUsername: DynPassword: DynMinTTLSeconds:0 OCIConfigFile:/etc/kubernetes/oci.yaml InMemoryZones:[] OVHEndpoint:ovh-eu OVHApiRateLimit:20 PDNSServer:http://localhost:8081 PDNSAPIKey: PDNSTLSEnabled:false TLSCA: TLSClientCert: TLSClientCertKey: Policy:sync Registry:txt TXTOwnerID:default TXTPrefix: TXTSuffix: Interval:1m0s MinEventSyncInterval:5s Once:false DryRun:false UpdateEvents:false LogFormat:text MetricsAddress::7979 LogLevel:info TXTCacheInterval:0s TXTWildcardReplacement: ExoscaleEndpoint:https://api.exoscale.ch/dns ExoscaleAPIKey: ExoscaleAPISecret: CRDSourceAPIVersion:externaldns.k8s.io/v1alpha1 CRDSourceKind:DNSEndpoint ServiceTypeFilter:[] CFAPIEndpoint: CFUsername: CFPassword: RFC2136Host: RFC2136Port:0 RFC2136Zone: RFC2136Insecure:false RFC2136GSSTSIG:false RFC2136KerberosRealm: RFC2136KerberosUsername: RFC2136KerberosPassword: RFC2136TSIGKeyName: RFC2136TSIGSecret: RFC2136TSIGSecretAlg: RFC2136TAXFR:false RFC2136MinTTL:0s RFC2136BatchChangeSize:50 NS1Endpoint: NS1IgnoreSSL:false NS1MinTTLSeconds:0 TransIPAccountName: TransIPPrivateKeyFile: DigitalOceanAPIPageSize:50 ManagedDNSRecordTypes:[A CNAME] GoDaddyAPIKey: GoDaddySecretKey: GoDaddyTTL:0 GoDaddyOTE:false}"
time="2021-09-11T08:38:44Z" level=info msg="Instantiating new Kubernetes client"
time="2021-09-11T08:38:44Z" level=info msg="Using inCluster-config based on serviceaccount-token"
time="2021-09-11T08:38:44Z" level=info msg="Created Kubernetes client https://172.20.0.1:443"
time="2021-09-11T08:38:54Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T08:38:54Z" level=info msg="All records are already up to date"
time="2021-09-11T08:39:51Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T08:39:51Z" level=info msg="All records are already up to date"
time="2021-09-11T08:40:51Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T08:40:51Z" level=info msg="All records are already up to date"
time="2021-09-11T08:41:53Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T08:41:53Z" level=info msg="All records are already up to date"
time="2021-09-11T08:42:53Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T08:42:53Z" level=info msg="All records are already up to date"
time="2021-09-11T08:43:54Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T08:43:54Z" level=info msg="All records are already up to date"
```

We now need to update the NGINX controller service so that its NLB uses the SSL certificate and terminates TLS connections at the load balancer.

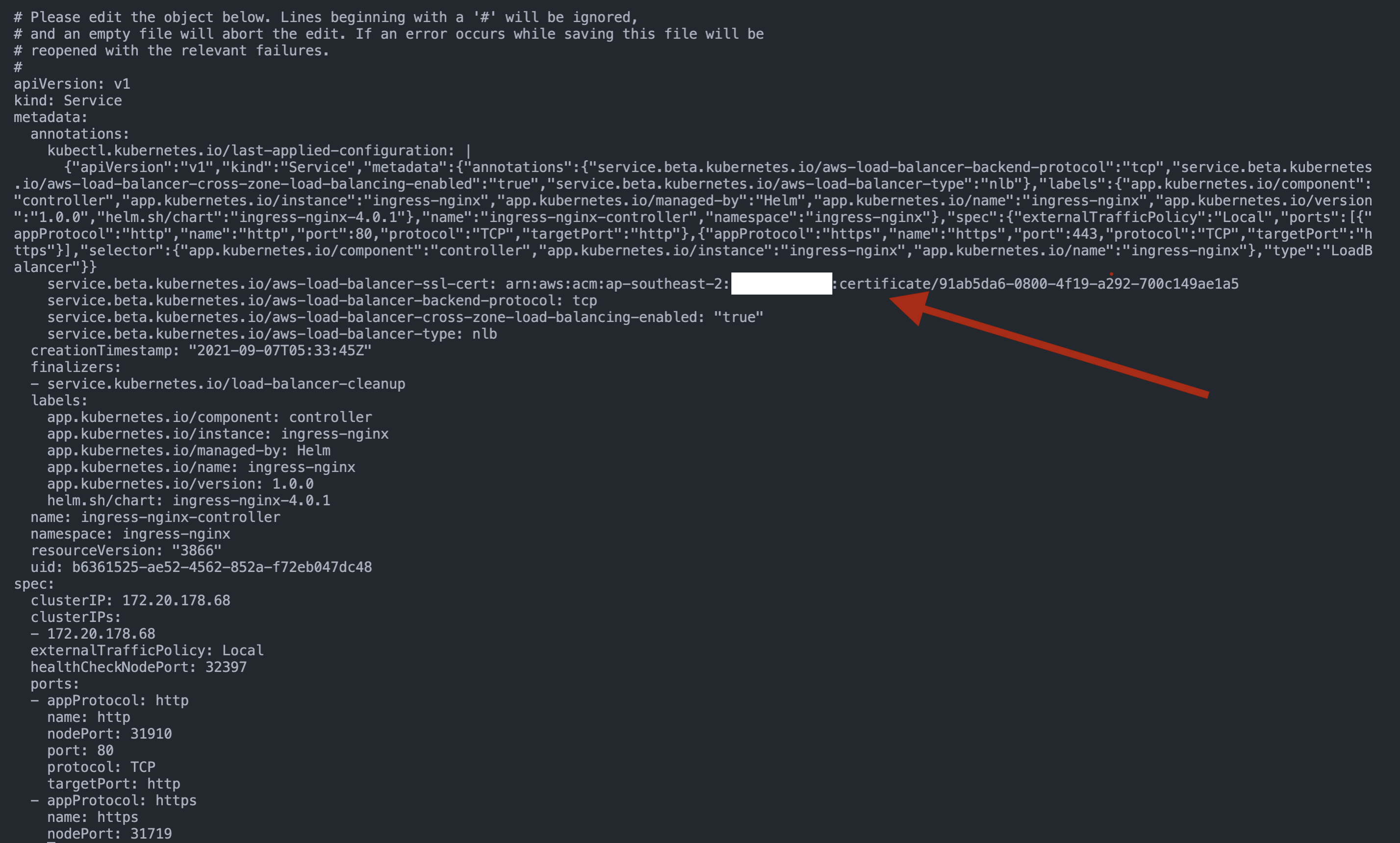

Run kubectl edit -n to edit the service resource and add the annotation service.beta.kubernetes.io/aws-load-balancer-ssl-cert: ACM_CERT_ARN, replacing ACM_CERT_ARN with the ARN of the certificate we created earlier (stored in the SSM parameter /pras/eks/app/certificate/arn).

This presents a problem: the Kubernetes service controller tries to create a new listener on port 443 for the certificate, but a listener already exists on that port, so every sync fails with a DuplicateListener error. Run kubectl describe svc ingress-nginx-controller -n ingress-nginx to read the events:

```
$ kubectl describe svc ingress-nginx-controller -n ingress-nginx
Name: ingress-nginx-controller
Namespace: ingress-nginx
Labels: app.kubernetes.io/component=controller
        app.kubernetes.io/instance=ingress-nginx
        app.kubernetes.io/managed-by=Helm
        app.kubernetes.io/name=ingress-nginx
        app.kubernetes.io/version=1.0.0
        helm.sh/chart=ingress-nginx-4.0.1
Annotations: service.beta.kubernetes.io/aws-load-balancer-backend-protocol: tcp
             service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: true
             service.beta.kubernetes.io/aws-load-balancer-ssl-cert:
               arn:aws:acm:ap-southeast-2:[REDACTED]:certificate/91ab5da6-0800-4f19-a292-700c149ae1a5
             service.beta.kubernetes.io/aws-load-balancer-type: nlb
Selector: app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
Type: LoadBalancer
IP Families: <none>
IP: 172.20.178.68
IPs: 172.20.178.68
LoadBalancer Ingress: ab6361525ae524562852af72eb047dc4-d9886e3de31f1805.elb.ap-southeast-2.amazonaws.com
Port: http 80/TCP
TargetPort: http/TCP
NodePort: http 31910/TCP
Endpoints: 10.0.0.145:80
Port: https 443/TCP
TargetPort: https/TCP
NodePort: https 31719/TCP
Endpoints: 10.0.0.145:443
Session Affinity: None
External Traffic Policy: Local
HealthCheck NodePort: 32397
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Warning SyncLoadBalancerFailed 16m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: c92bf3a0-4e86-461a-9b3a-7ef63189702f"
  Warning SyncLoadBalancerFailed 16m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: a10541de-047f-4211-99ad-f680d75e360c"
  Warning SyncLoadBalancerFailed 15m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: 70e8514f-6de6-4378-82c6-bccc5fc6b72f"
  Warning SyncLoadBalancerFailed 15m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: 3dc34d20-59b2-4c64-abcd-9e3ba99f9ed4"
  Warning SyncLoadBalancerFailed 14m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: 2100fd6c-42d1-4aff-8434-9f64989240cd"
  Warning SyncLoadBalancerFailed 13m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: 4d62de0a-3dae-4857-81ac-a2efb0525abd"
  Warning SyncLoadBalancerFailed 10m service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: 10d17d55-05b2-4e66-96d0-54dcae7e8dc4"
  Warning SyncLoadBalancerFailed 5m55s service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: e87dd113-7eb8-467f-9bcf-b184e7e8116c"
  Normal EnsuringLoadBalancer 55s (x10 over 4d15h) service-controller Ensuring load balancer
  Warning SyncLoadBalancerFailed 54s service-controller Error syncing load balancer: failed to ensure load balancer: error creating load balancer listener: "DuplicateListener: A listener already exists on this port for this load balancer 'arn:aws:elasticloadbalancing:ap-southeast-2:[REDACTED]:loadbalancer/net/ab6361525ae524562852af72eb047dc4/d9886e3de31f1805'\n\tstatus code: 400, request id: ea5d55f9-f6f8-4c1c-b5e6-54a961a28d79"
```

Run kubectl get svc ingress-nginx-controller -n ingress-nginx -o yaml > nginx-service.yaml to save the current configuration of the NGINX service to a file named nginx-service.yaml.

Then, run kubectl delete svc ingress-nginx-controller -n ingress-nginx to delete the service:

```
$ kubectl delete svc ingress-nginx-controller -n ingress-nginx
service "ingress-nginx-controller" deleted
```

This deletes the NGINX controller service along with its associated Network Load Balancer. Running kubectl get all -n ingress-nginx confirms that the service is no longer present:

```
$ kubectl get all -n ingress-nginx
NAME READY STATUS RESTARTS AGE
pod/ingress-nginx-controller-65c4f84996-v54dk 1/1 Running 0 47h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/ingress-nginx-controller-admission ClusterIP 172.20.142.246 <none> 443/TCP 4d16h

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/ingress-nginx-controller 1/1 1 1 4d16h

NAME DESIRED CURRENT READY AGE
replicaset.apps/ingress-nginx-controller-65c4f84996 1 1 1 4d16h

NAME COMPLETIONS DURATION AGE
job.batch/ingress-nginx-admission-create 1/1 6s 4d16h
job.batch/ingress-nginx-admission-patch 1/1 7s 4d16h
```

Before redeploying, update the https entry under spec.ports in nginx-service.yaml so that its targetPort is http (port 80). The NLB now terminates TLS, so NGINX receives plain HTTP, and the ingress sample-eks-app-ingress listens on port 80:

```yaml
- appProtocol: https
  name: https
  nodePort: 31719
  port: 443
  protocol: TCP
  targetPort: http
```

This time, when we run kubectl apply -f nginx-service.yaml to redeploy the service, the listener on port 443 is created and the SSL certificate is associated with the NLB for TLS termination:

```
$ kubectl apply -f nginx-service.yaml
service/ingress-nginx-controller created

$ kubectl get svc ingress-nginx-controller -n ingress-nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
ingress-nginx-controller LoadBalancer 172.20.178.68 a53c676577b9c4050a639d9a4372884e-0987ffe41fbacac9.elb.ap-southeast-2.amazonaws.com 80:31910/TCP,443:31719/TCP 32s
```
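The two manual edits made to the saved service (adding the certificate annotation and pointing the https port at NGINX's http target port) can also be scripted. This sketch assumes the service is exported with -o json instead of -o yaml, so only the standard library is needed; prepare_nlb_tls_service is hypothetical, and the ARN used in the checks is a placeholder:

```python
import json

SSL_CERT_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-ssl-cert"


def prepare_nlb_tls_service(service, cert_arn):
    """Return a copy of a Service manifest set up for TLS termination at the NLB.

    The input dict is left untouched.
    """
    svc = json.loads(json.dumps(service))  # deep copy of plain JSON data
    annotations = svc.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[SSL_CERT_ANNOTATION] = cert_arn
    for port in svc.get("spec", {}).get("ports", []):
        if port.get("name") == "https":
            # The NLB decrypts TLS, so NGINX receives plain HTTP on its http port.
            port["targetPort"] = "http"
    return svc
```

Writing json.dumps(prepare_nlb_tls_service(service, arn), indent=2) to a file gives a manifest that kubectl apply -f accepts, since kubectl reads JSON as well as YAML.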

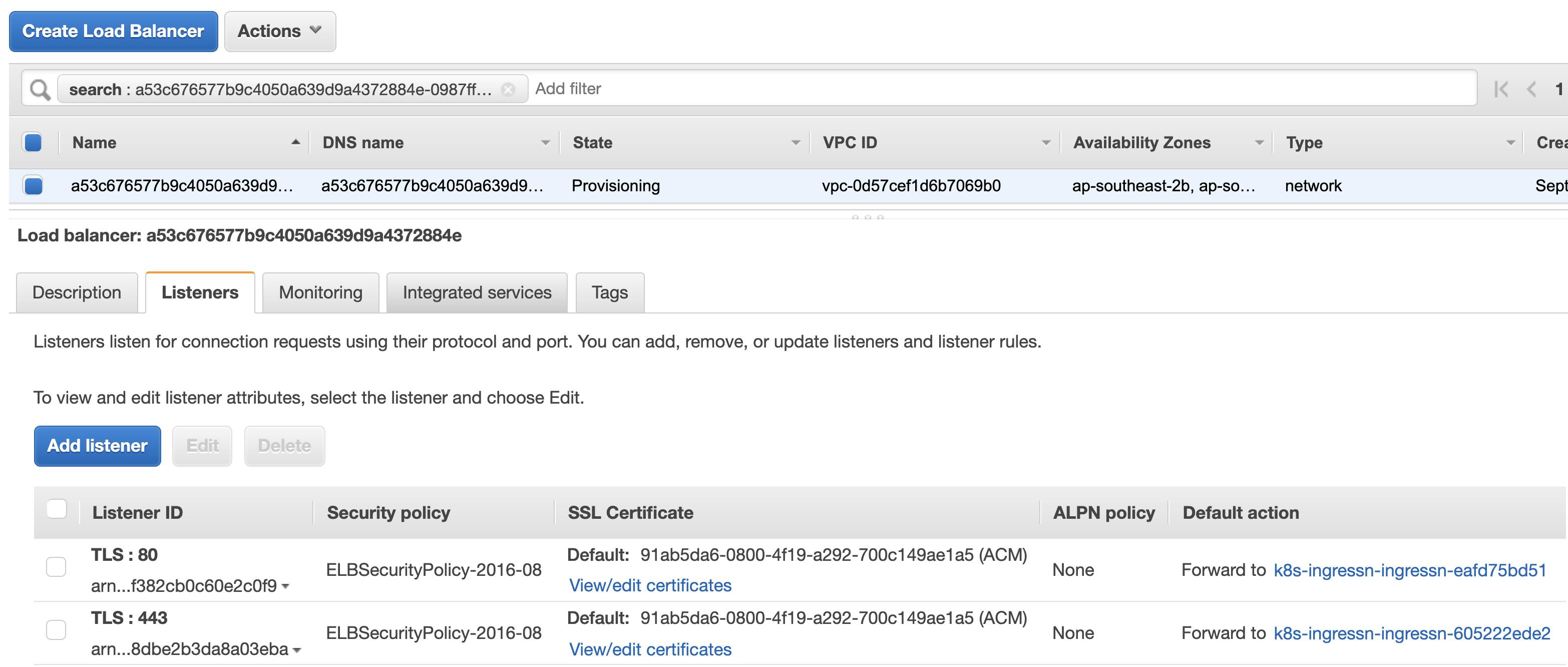

We can verify in the AWS console that the NLB is being created with its listeners and the SSL certificate associated.

Apply the following Ingress object with kubectl apply -f ingress.yaml:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-eks-app-ingress
  namespace: boltdynamics
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: app.learnwithpras.xyz
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: sample-eks-app-service
                port:
                  number: 80
```
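With --source=ingress, external-dns derives the DNS names it manages from Ingress objects like this one. As a simplified sketch of that step (the real source handles more cases, such as annotations), hostnames come from spec.rules[].host and spec.tls[].hosts; the two flags mirror IgnoreIngressRulesSpec and IgnoreIngressTLSSpec from the external-dns config log earlier. ingress_hostnames is hypothetical:

```python
def ingress_hostnames(ingress, ignore_rules_spec=False, ignore_tls_spec=False):
    """Collect candidate DNS names from an Ingress manifest (simplified model)."""
    spec = ingress.get("spec", {})
    hosts = []
    if not ignore_rules_spec:
        # Rules without a host (catch-all rules) produce no DNS name.
        hosts += [rule["host"] for rule in spec.get("rules", []) if rule.get("host")]
    if not ignore_tls_spec:
        for tls in spec.get("tls", []):
            hosts += tls.get("hosts", [])
    # Deduplicate while preserving order.
    return list(dict.fromkeys(hosts))
```

For the manifest above, the only name is app.learnwithpras.xyz, which also passes the --domain-filter, so external-dns will create a record for it pointing at the NLB.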

Check the external-dns pod logs to see whether it has added records to the hosted zone:

```
$ kubectl logs external-dns-deployment-778d4c597-l8nn2 -n external-dns
time="2021-09-11T23:13:58Z" level=info msg="Applying provider record filter for domains: [app.learnwithpras.xyz. .app.learnwithpras.xyz.]"
time="2021-09-11T23:13:59Z" level=info msg="Desired change: CREATE app.learnwithpras.xyz A [Id: /hostedzone/Z07438702O3MLG3B1HLEU]"
time="2021-09-11T23:13:59Z" level=info msg="Desired change: CREATE app.learnwithpras.xyz TXT [Id: /hostedzone/Z07438702O3MLG3B1HLEU]"
time="2021-09-11T23:13:59Z" level=info msg="2 record(s) in zone app.learnwithpras.xyz. [Id: /hostedzone/Z07438702O3MLG3B1HLEU] were successfully updated"
```

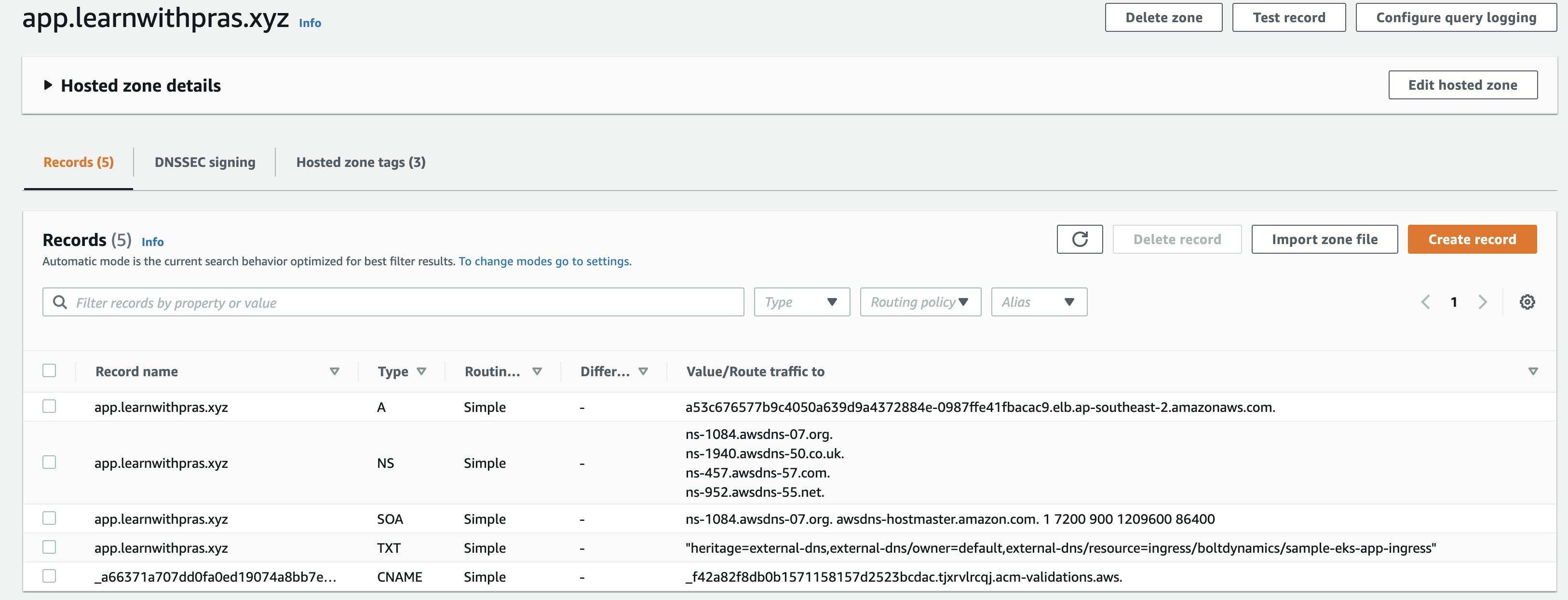

Then, open the Route53 console and check that the records have been added to the hosted zone:

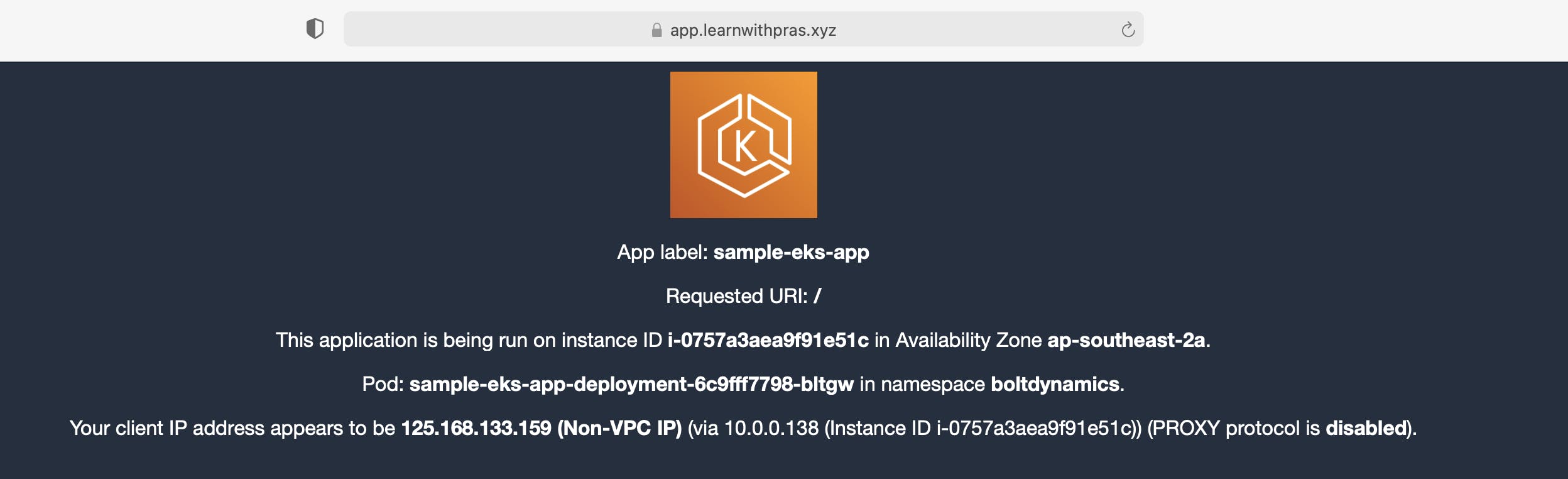

Finally, open https://app.learnwithpras.xyz in a web browser.

This brings us to the end of this article. Thank you for reading all the way through; I hope I was able to explain how to front an application deployed in an EKS environment with a custom domain, and all the steps, tools and techniques needed to integrate it fully.

In the next article, we will terminate TLS at the NGINX controller using Let's Encrypt, instead of at the NLB as we did in this demo.

Stay tuned and stay safe 👋